BBC Watch: Elon Musk says he is “disappointed” with Trump’s “big, beautiful bill”, in interview with CBS Sunday Morning Billionaire…

Read More

Chip designer Nvidia reported that revenues grew in the first quarter of the year, rising more than 69% from a…

Read More

Using AI chatbots to recreate the voice or likeness of a loved one who has passed away.

Read More

The encrypted messaging service Telegram has entered into a partnership with Elon Musk’s artificial intelligence company, xAI. Announcing the deal…

Read More

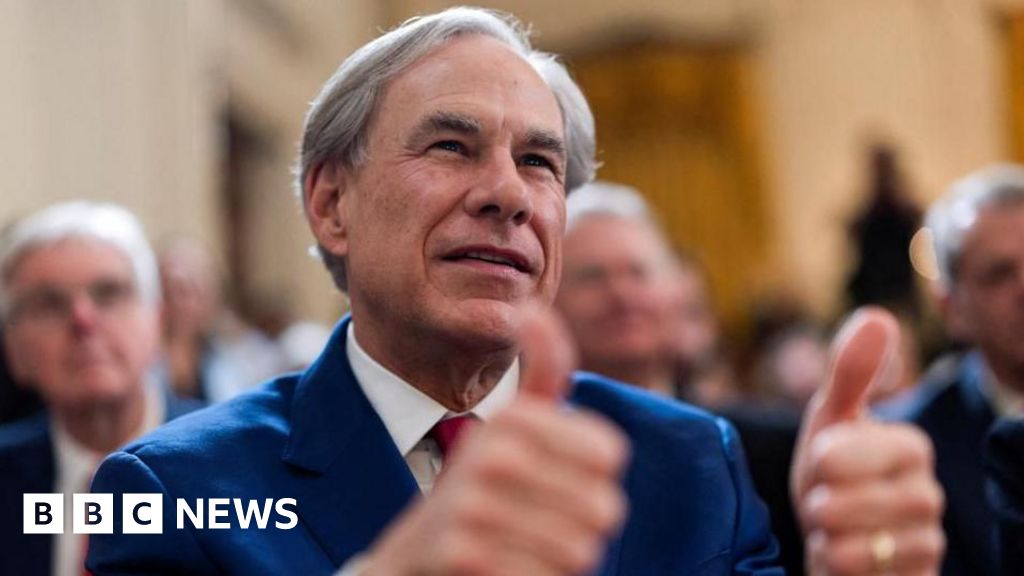

Texas Governor Greg Abbott has signed an online child safety bill that requires Apple and Google to ensure that their…

Read More

A second suspect has been arrested for allegedly kidnapping and torturing an Italian tourist in an upmarket Manhattan home for…

Read More

A new form of testing claims to predict how likely an unborn child is to develop diseases.

Read More

Tom Gerken, Technology reporter. Adidas has disclosed it’s been hit by a cyber attack in which customers’ personal…

Read More

Liv McMahon, Technology reporter. The EU is investigating Pornhub, Stripchat and two other pornography websites it believes may…

Read More

Devina Gupta, Business reporter. An earthquake changed the life of Mansukh Prajapati. For Mansukh Prajapati, childhood in the…

Read More